With the 2018 hurricane season just weeks away, the independent not-for-profit Center for Robot-Assisted Search and Rescue (CRASAR) announced the first-ever Disaster Robotics Awards. The 2018 Ground, Aerial, and Marine Rescue Robots of the Year were announced on April 14, 2018, during a National Robotics Week ceremony at SEAD Gallery in Bryan, TX.

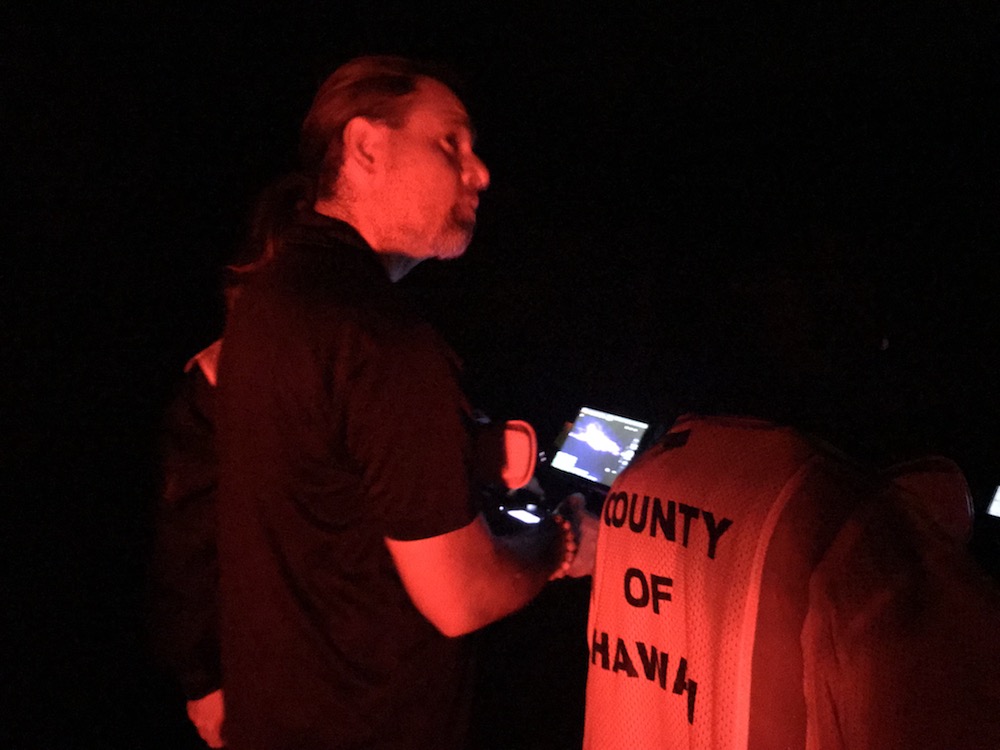

CRASAR’s 2018 Aerial Rescue Robot of the Year is the DJI Mavic Pro. Priced at $1,000, this drone proved able to do everything the experts needed. Because batteries are expensive, the low price and the ability to recharge from a car charger are particularly important, as is the stabilized camera with pan and tilt. The review team also noted the Mavic Pro’s first-person view, as well as its programmed views, which provide the option of broad, NASA-style tiled mapping if needed. Most importantly, the Mavic Pro proved itself a workhorse among responders and quickly became the tool of choice, flying 78 of the 112 flights during CRASAR’s Hurricane Harvey response and, just weeks later, all 247 flights when the team responded to Hurricane Irma.

Another workhorse among the 2018 honorees is the 2018 Marine Rescue Robot of the Year, Hydronalix EMILY. One of the robots most widely used by lifeguards worldwide, this surfboard-sized robot boat can run rescue lines to people in distress and can tow five to eight people to safety as they hang on. These features have made EMILY a favorite among lifeguards in both routine and large-scale water rescues, including refugee rescues along the migrant route in the Aegean Sea.

A lesser-known but very promising terrestrial robot won 2018 Ground Rescue Robot of the Year. Carnegie Mellon University’s aptly named Snakebot can propel itself into the smallest of spaces, allowing rescuers to search for signs of life where dogs and people cannot reach. The 2017 Mexico City earthquake marked the first use of Snakebot in the response phase of a disaster operation.

Spearheaded by internationally renowned disaster roboticist Dr. Robin Murphy, the Disaster Robotics Awards were created to raise awareness of the role and value of robots in disaster response and to help prepare responders to put these resources to work in both routine responses and disasters.

“These robots enable life-saving decision making for responders and emergency managers,” Murphy says. “Rescue decisions and critical infrastructure decisions during that response phase are made very rapidly based on the best available information at the time and these robots, well-deployed with the right teams of operators and experts, are getting key information to decision makers so they can save lives and efficiently manage risk.”

“The goal of the Disaster Robotics Awards is twofold,” Murphy explains. “One, we are letting people know what’s working and why. Two, we want to get people thinking about the next disaster.”

The ceremony also included other awards and acknowledgements. Educator of the Year went to David Merrick of the FSU Center for Disaster Risk Policy for developing and offering classes on small unmanned aircraft systems (UAS) tailored to the needs of emergency professionals. One of the courses is now available online at https://tinyurl.com/suas-course. Agency Partner of the Year went to the Fort Bend County Office of Emergency Management for its partnership on Hurricane Harvey preparation, response, and recovery activities. The Service Award went to Justin Adams, who coordinated CRASAR operations and served as Air Operations Chief for manned and unmanned operations for Fort Bend County during Harvey, then ran CRASAR operations for Hurricane Irma, and deployed a third time, for three weeks, to Hurricane Maria.

Hurricane season begins June 1, and experts are anticipating another strong season of storms in 2018. Murphy and her colleagues based the 2018 Disaster Robotics Awards on which robots worked well for responders over the past year. The CRASAR team documented robot use in response to Hurricanes Harvey, Irma, and Maria, the Mexico City earthquake, and water rescues of Syrian boat refugees, among other deployments, gathering data on the number, duration, and nature of each deployment. These insights into which robots work best for responders guided the selection of the 2018 honorees.

While the awards recognize the contribution of robots to disaster response over the past year, CRASAR’s insights into the value of disaster robots, and best practices for using them, date back to the first use of robots in disaster response, at the World Trade Center in the aftermath of the September 11th attacks. Murphy was on hand in New York and has deployed robots to disasters around the world ever since. Through her work with both CRASAR and the Humanitarian Robotics and AI Laboratory in Texas A&M University’s Computer Science and Engineering Department, Murphy and her colleagues continue to promote best practices and support responders seeking the benefits of disaster robots.